Just when you think you know enough about shake-ups

Yesterday I experienced my biggest shake-up since I started competing on Kaggle: I dropped from the silver zone to nowhere. The competition was an image segmentation competition, and in my past experience, computer vision (CV) competitions have relatively small shake-ups compared to tabular, transaction, and time-series prediction competitions. I have seen CV competitions with small shake-ups even when there was an obvious difference between the train and test distributions. So this came as a bit of a surprise, but honestly, it is not all that bad.

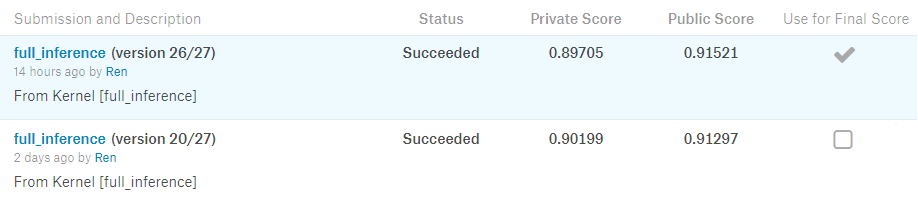

I chose a risky strategy: training models on the full training set instead of using cross-validation, and relying solely on the public leaderboard for feedback, so that I could fit five times as many models from different architectures. That part actually worked out fine. My ensemble of 7 models, without any threshold tuning based on the public leaderboard, would still have kept its silver-medal position. It was the thresholding that sank my ranking. A lesson well learnt.
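To make the role of the threshold concrete, here is a minimal sketch, assuming each model outputs a per-pixel probability map and the ensemble is a plain unweighted average. The function names, the 0.5 default, and the use of the Dice coefficient as the metric are illustrative assumptions, not the actual competition setup or my exact pipeline.

```python
import numpy as np


def ensemble_masks(prob_maps, threshold=0.5):
    """Average per-pixel probabilities from several models, then binarize.

    Assumption: every model returns an (H, W) array of probabilities in [0, 1].
    The 0.5 default is a neutral, untuned cutoff (hypothetical choice).
    """
    stacked = np.stack(prob_maps, axis=0)   # shape (n_models, H, W)
    mean_prob = stacked.mean(axis=0)        # simple unweighted average
    return (mean_prob > threshold).astype(np.uint8)


def dice(pred, target, eps=1e-7):
    """Dice coefficient between two binary masks (1.0 when both are empty)."""
    pred = pred.astype(bool)
    target = target.astype(bool)
    intersection = np.logical_and(pred, target).sum()
    return (2.0 * intersection + eps) / (pred.sum() + target.sum() + eps)


if __name__ == "__main__":
    # Toy 2x2 probability maps from three hypothetical models.
    a = np.array([[0.9, 0.2], [0.6, 0.1]])
    b = np.array([[0.8, 0.4], [0.4, 0.3]])
    c = np.array([[0.7, 0.3], [0.8, 0.2]])
    target = np.array([[1, 0], [1, 0]])

    # Same ensemble, two different cutoffs: only the threshold changes.
    for t in (0.5, 0.25):
        mask = ensemble_masks([a, b, c], threshold=t)
        print(t, mask.tolist(), round(float(dice(mask, target)), 3))
```

The point of the toy example is that the averaged probabilities are identical in both runs; moving the cutoff alone changes which pixels count as positive and therefore the score. A threshold chosen to maximize a small public split can just as easily be fitting that split's noise.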